Help getting started with PetaPoco

I’ve recently spent some time working with PetaPoco. As always with something new I found problems early and often but not real problems with PetaPoco itself, just typical “What does this error mean?” and “How do I do this then?”

The kind of objects I want to use with PetaPoco are domain entities so there’s a bit more meat to them than a flat poco. Here are the test classes I started with :

public class Author

{

public int id {get; internal set;}

public string name {get; internal set;}

}

public class Article

{

public int id {get; internal set;}

public string content {get; internal set;}

public string title {get; internal set;}

public Author author {get; internal set;}

}

The property setters are internal so that client (application) code using the entities cannot change their values. The only way these properties would be changed is either :

- in the repository when the entities is being loaded (hydrated)

- during construction or through a builder class

- through public methods on the entities

In my real code I’ll use the InternalsVisibleTo attribute on the classes to ensure that outside of the domain classes only the repository assembly can access the setters. This test is stripped right back so the only remnant of these requirements are the internal setters. Also notice that there is a relationship between Article and Author; when I load an Article I want the Author to be brought back too. So now here are the learning curve issues I had :

Querying data

Let’s load a list of articles from the database and join the author table too :

db.Query(

"SELECT * FROM article left outer join " +

"author on author.id = article.author_id")

This throws an exception :

Unhandled Exception: System.ArgumentNullException: Value cannot be null. Parameter name: meth at System.Reflection.Emit.DynamicILGenerator.Emit(OpCode opcode, MethodInfo meth)

This happens because PetaPoco currently only looks for public setters when mapping to pocos and all my setters are internal. This is easily fixed in PetaPoco.cs by changing all instances of GetSetMethod() to GetSetMethod( true) (update: this change was added to PetaPoco core shortly after this post, so you won’t need to apply it)

Now I want to load a single article. Let’s try using the new multi-poco support :

var article = db.Single(

"SELECT * FROM article left outer join author" +

" on author.id = article.author_id");

This doesn’t compile because the multi-poco feature only applies to the Query() and Fetch() functions at the moment so I can only pass in the type of the result which is Article. The solution is simple :

var article = db.Query(

"SELECT * FROM article left outer join author " +

" on author.id = article.author_id where article.id=@0",

1).SingleOrDefault();

Inserting data

Ok let’s try and insert a new article. We’ll leave out the author for now and see if the foreign key constraint in the database kicks in.

int newRecordId = (int)db.Insert( new Article() {title="Article 1",

content="Hello from article 1"});

PetaPoco didn’t like it :

Unhandled Exception: Npgsql.NpgsqlException: ERROR: 42P01: relation “Article” does not exist at Npgsql.NpgsqlState.d__a.MoveNext()

I’m using PostgreSQL and it seems that it is case sensitive, if I change my class names to all lower case it works. But I don’t want to do that so all I need to do is use the TableName attribute which overrides the convention of class name equals table name :

[TableName( "author")]

public class Author

{

public int id {get; internal set;}

public string name {get; internal set;}

}

[TableName( "article")]

public class Article

{

public int id {get; internal set;}

public string content {get; internal set;}

public string title {get; internal set;}

public Author author {get; internal set;}

}

Now I can get a bit further but I still get an error :

Unhandled Exception: Npgsql.NpgsqlException: ERROR: 42703: column “author” of relation “article” does not exist at Npgsql.NpgsqlState.d__a.MoveNext()

This happens because my poco has an author property and this doesn’t map directly to the author_id column on the database. The author_id column is an integer, so how can I pass my poco into Update() and have it use an author id? You can’t. Instead you need to use a different overload of the Insert() method :

int newRecordId = (int)db.Insert( "article", "id", true,

new {title="A new article",

content="Hello from the new article",

author_id=(int)1});

We are now no longer using the poco, we are using an anonymous type. This is doubly useful because we now have the option to just update specific columns if we want to. Code calling my repository will still pass in a poco but the repository will strip out the values it wants to update. I like that.

In this example I already knew the author id because the user will have selected the author from a drop down while entering the article details.

Updating data

Now let’s update an existing article :

var newArticle = db.Query(

"SELECT * FROM article left outer join author on" +

"author.id = article.author_id where article.id=@0",

1).SingleOrDefault();

newArticle.content += " The end.";

db.Update( newArticle);

Here is this code’s exception :

Unhandled Exception: System.InvalidCastException: Can’t cast PetaPoco_MultiPoco_

Test.Author into any valid DbType.

at Npgsql.NpgsqlParameter.set_Value(Object value)

Again, PetaPoco can’t map the author property to a column on the article table. The way around this is the tell PetaPoco that the author property shouldn’t be used during inserts or updates. We use the ResultColumn attribute for this :

public class Article

{

public int id {get; internal set;}

public string content {get; internal set;}

public string title {get; internal set;}

[ResultColumn]

public Author author {get; internal set;}

}

Now we get another error, remember that I’m using PostgreSQL here :

Unhandled Exception: Npgsql.NpgsqlException: ERROR: 42703: column “ID” does not exist at Npgsql.NpgsqlState.

d__a.MoveNext()

This happens because PetaPoco will use “ID” as the default primary key if you don’t specify it. There are three solutions to this. Firstly I could change my database to use “ID” as the column name. Secondly I could change PetaPoco.cs to use “id” as the default. This would be done in the constructor for the PocoData internal class :

TableInfo.PrimaryKey = a.Length == 0 ? "id" : (a[0] as PrimaryKeyAttribute).Value;

Thirdly, and preferably, I’ll use the PrimaryKey attribute on my poco classes :

[TableName( "author")]

[PrimaryKey("id")]

public class Author

{

public int id {get; internal set;}

public string name {get; internal set;}

}

[TableName( "article")]

[PrimaryKey("id")]

public class Article

{

public int id {get; internal set;}

public string content {get; internal set;}

public string title {get; internal set;}

[ResultColumn]

public Author author {get; internal set;}

}

Also note that if we want to update the author we’d just do a separate call to Update(). If I find anything else worth sharing I’ll update this post

The move towards micro ORMs

Many of us know about the apparently recent appearance of micro ORMs thanks to Rob Conery’s Massive followed by Sam Saffron’s (and Stack Overflow’s) Dapper and more. However, having read around the web it is clear that micro ORMs have existed for as long as ADO.NET, but without the publicity. Some developers don’t like their SQL taken away from them and don’t like the idea of using a heavy ORM if they can help it. So they write their own object mapper that works for them. Of course there are many advantages to using a mature fullsome ORM, it just depends on how you look at it and what’s important in your world

I’ve been resisting using LINQ to SQL / EF / NHibernate partly because I was concerned about efficiency and control but mainly because at work I’ve just not needed to change the data access layers nor have I had the opportunity to do so. I inherited a data access layer that mainly uses stored procedures and ADO.NET and there’s no need to change this. There’s nothing wrong with it and it’s stable and switching to an ORM would be virtually impossible. Does a stored procedure heavy application even suit an ORM? Perhaps for reads

But at home it’s different

At home it’s different. I’m building an application that is still in it’s early stages so I can spend time experimenting with micro ORMs. From what I’ve seen so far I like them all because :

- they are closer to the metal

- the source code is right there for you to step into

- they are much faster than full ORMs

- the learning curve is much shorter

The downside is that you need to know a little SQL to make the most of them, but this excites me! SQL is already a mature ubiquitous DSL for accessing data. If you can talk this language you are at a serious advantage than if you’re relying on an ORM to generate the SQL for you. Dapper and PetaPoco both share a slight optimisation complication in that they both dynamically generate poco mapping methods for your pocos using System.Reflection.Emit.ILGenerator. I tend to skip past that bit in the source for now  but guess what… these two are the fastest C# based micro ORMs available right now

but guess what… these two are the fastest C# based micro ORMs available right now

I’ve experimented a little with Massive, Simple.Data, Dapper and PetaPoco with PetaPoco looking like my personal favourite. I’ve spent most of my experimentation time with PetaPoco so I’ll follow this post up with a list of learning curve problems I had.

Learning new stuff as a developer

Constant learning is vital to most careers but it is especially vital for developers. Learning is rewarding and addictive, but also difficult. It takes time, determination and motivation to push yourself out of your comfort zone and reach the next camp in the ascent of your career. There’s no room for stupid pride either so be prepared to :

- ask stupid questions of people who know what you want to know

- feel confused after reading that page for the third time

- bow to those of superior knowledge

- feel overwhelmed

Ultimately what really matters is that you slowly but surely insert information into your databrain and keep it there.

I started to take learning seriously about 6 years ago. Prior to that I was in the pleasure zone known as IGNORANCE where I thought I knew all I needed to and that I was somehow immune to having to learn stuff. That’s ignorance all over, you don’t know what you need to know so it doesn’t exist, right? Slowly that changed as I started to buy more tech books and write more code in my spare time. My delusions of grandeur were tested to their brittle limits

But I had a problem…. I wasn’t very good at learning. I would read a book but nothing much would happen. I’d forget things I’d read about or used before, or I’d have no frame of reference on which to hang new information. That’s when I begun to take learning how to learn seriously.

Learning how to learn. Is that right?!

Yep that’s right there are loads of techniques for learning stuff. I started with the assumption that I had problems committing information to memory so I got into mnemonics and other memory techniques. I bought half a dozen (that’s six) of the Tony Buzan books and came up with elaborate story methods, journey methods, location methods, peg methods and the beginnings of a number system. Got nowhere with those as I wasn’t able to (or didn’t try hard enough to) translate the kind of programming information I was learning into any of these methods. After about six months (that’s half a dozen) I scrapped it, then moved onto mind maps.

Free your mind with FreeMind

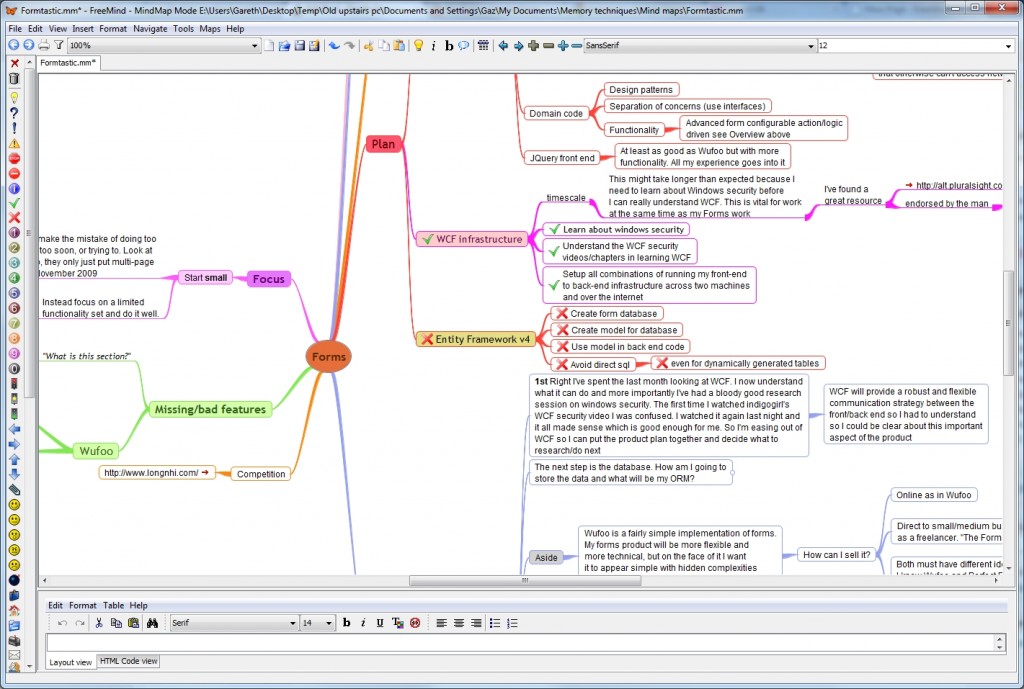

I had a great time with FreeMind. The first thing I did was change the default style and colours to this custom patterns.xml file because out of the box it looks bland (or it did when I started using it). I find mind maps to be a great way of organising information together but in the end I concluded that they are clunky, non-portable and complex. I know the whole point of a mind map is to keep it simple and use minimal and sharp wording but is this suited to learning typical programmer information? Look at this fraction of one of my mind maps and you tell me :

And here’s the same mindmap fully expanded :

Viewing these mind maps is only possible in FreeMind itself (although you can export to a web page and PDF now too). The other problem is that the mind maps are just visually huge and the interface is fiddly. Finally there are just too many little steps to take when you want to note something down. And that’s the whole problem. When learning stuff I want to take notes immediately and move on. For me it has to be transient, simple and unobtrusive.

I still use FreeMind but not for taking learning notes.

In my next post I’ll emit more off-scented verbage about where this all ended up and how I take notes nowadays.

In a nutshell it’s a mixture of low tech and high tech :

- 6×4″ index cards

- The excellent ZIM wiki

- A Samsung Galaxy Tab

- The Kindle app

- Photo scanner